Citation

If you wish, please cite our work as:

@INPROCEEDINGS{panerati2021learning,
    title={Learning to Fly---a Gym Environment with PyBullet Physics for Reinforcement Learning of Multi-agent Quadcopter Control},
    author={Jacopo Panerati and Hehui Zheng and SiQi Zhou and James Xu and Amanda Prorok and Angela P. Schoellig},
    booktitle={2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
    year={2021},
    volume={},
    number={},
    pages={},
    doi={}
}

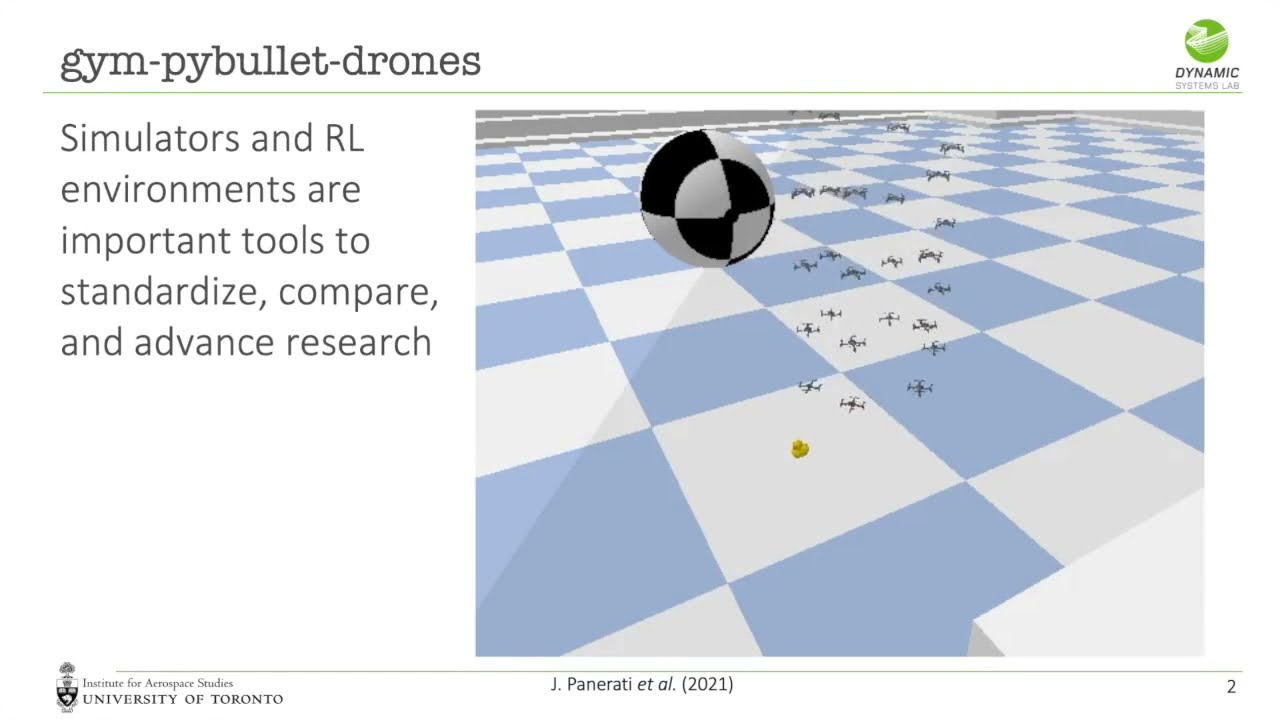

Simple OpenAI Gym environment based on PyBullet for multi-agent reinforcement learning

The default DroneModel.CF2X dynamics are based on Bitcraze’s Crazyflie 2.x nano-quadrotor
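A common way to model a quadrotor like the Crazyflie 2.x is to have each motor produce a thrust proportional to the square of its speed, T = k_f · RPM². The sketch below assumes that model plus illustrative Crazyflie-like values (mass 0.027 kg, k_f = 3.16e-10 N/RPM², g = 9.8 m/s²). These numbers are assumptions to check against the repo's own CF2X parameters, and `hover_rpm` is a hypothetical helper, not part of the package. Balancing four equal motor thrusts against the weight gives the hover speed:

```python
import math

# ASSUMED Crazyflie-like parameters, for illustration only;
# verify them against the repo's CF2X model before relying on them.
MASS_KG = 0.027   # assumed total mass, kg
KF = 3.16e-10     # assumed thrust coefficient, N / RPM^2
G = 9.8           # gravitational acceleration, m/s^2


def hover_rpm(mass_kg: float, kf: float, g: float = G, n_motors: int = 4) -> float:
    """Motor speed at which n_motors thrusts of kf * rpm^2 each balance the weight m * g."""
    if mass_kg <= 0 or kf <= 0 or n_motors <= 0:
        raise ValueError("mass, kf, and motor count must be positive")
    # n_motors * kf * rpm^2 = m * g  =>  rpm = sqrt(m * g / (n_motors * kf))
    return math.sqrt(mass_kg * g / (n_motors * kf))


if __name__ == "__main__":
    print(f"hover speed ~ {hover_rpm(MASS_KG, KF):.0f} RPM")
```

With these assumed values the hover speed comes out at about 14,470 RPM. Because it scales with the square root of the mass, doubling the payload raises it by roughly 41%, not 100%.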

Requirements and Installation

The repo was written using Python 3 on macOS 10.15 and tested on macOS 11 and Ubuntu 18.04.

On macOS and Ubuntu

Major dependencies are gym, pybullet, stable-baselines3, and rllib:

$ pip3 install --upgrade numpy matplotlib Pillow cycler

$ pip3 install --upgrade gym pybullet stable_baselines3 'ray[rllib]'
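After running the commands above, one quick way to confirm the packages are visible to Python is to ask the import system for each top-level module without actually importing it. This is a minimal sketch using only the standard library; the module names are assumptions based on each package's usual import name (Pillow imports as `PIL`, stable-baselines3 as `stable_baselines3`, and RLlib ships inside `ray`), and `missing_modules` is a hypothetical helper.

```python
import importlib.util

# ASSUMED top-level import names for the packages installed above.
# Only top-level names are listed: find_spec on a dotted name such as
# "ray.rllib" would import the parent package and raise if it were missing.
REQUIRED = ["numpy", "matplotlib", "PIL", "cycler",
            "gym", "pybullet", "stable_baselines3", "ray"]


def missing_modules(names):
    """Return the module names that cannot be found on the current import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All dependencies found.")
```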

Video recording requires ffmpeg to be installed. On macOS:

$ brew install ffmpeg

On Ubuntu:

$ sudo apt install ffmpeg
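To check from Python whether ffmpeg is available before attempting to record video, one can look it up on `PATH` and query its version. A small sketch using only the standard library; `ffmpeg_version` is a hypothetical helper, not part of the repo.

```python
import shutil
import subprocess


def ffmpeg_version(executable="ffmpeg"):
    """Return the first line of `<executable> -version`, or None if it is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        return None
    # check=False: a non-zero exit status is reported via empty output, not an exception
    result = subprocess.run([path, "-version"], capture_output=True, text=True, check=False)
    lines = result.stdout.splitlines()
    return lines[0] if lines else None


if __name__ == "__main__":
    version = ffmpeg_version()
    print(version if version else "ffmpeg not found on PATH")
```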

The repo is structured as a Gym environment and can be installed with pip install --editable:

$ git clone https://github.com/utiasDSL/gym-pybullet-drones.git

$ cd gym-pybullet-drones/

$ pip3 install -e .
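With an editable install, Python imports the package directly from the cloned source tree, so changes to the clone take effect without reinstalling. The sketch below checks this by locating where the package is imported from. It assumes the import name is `gym_pybullet_drones` and that the script runs from the directory where `git clone` was executed (running it from inside the clone could give a false positive, since Python also searches the script's own directory). `package_location` and `is_inside` are hypothetical helpers.

```python
import importlib.util
import sys
from pathlib import Path


def package_location(name):
    """Return the directory a package is imported from, or None if it cannot be found."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).resolve().parent


def is_inside(path, root):
    """True if `path` lies within `root` (Path.relative_to raises ValueError otherwise)."""
    try:
        Path(path).resolve().relative_to(Path(root).resolve())
        return True
    except ValueError:
        return False


if __name__ == "__main__":
    # Hypothetical default: the clone sits in the current directory.
    repo_dir = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("gym-pybullet-drones")
    loc = package_location("gym_pybullet_drones")  # assumed import name
    if loc is None:
        print("gym_pybullet_drones is not installed")
    elif is_inside(loc, repo_dir):
        print(f"Editable install: imported from {loc}")
    else:
        print(f"Installed, but imported from outside the clone: {loc}")
```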

On Windows

Check these step-by-step instructions written by Dr. Karime Pereida for Windows 10

Use, Examples, and Documentation

Refer to the master branch’s README.md for further details

Example animations: Trajectory Tracking; RGB, Depth, and Segmentation Views; Downwash; Learn; Debug; Compare



UofT’s Dynamic Systems Lab / Vector Institute / Cambridge’s Prorok Lab / Mitacs